# Linux System Preparation

### *Cluster hostnames: hadoop01, hadoop02, hadoop03*

### *Linux version: CentOS 7*

### *Linux account: persagy*

1. Install the virtual machines

2. Configure a static IP on each node

3. Set the hostname of each node

4. Edit /etc/hosts to map each hostname to its IP

192.168.xxx.xxx hadoop01

192.168.xxx.xxx hadoop02

192.168.xxx.xxx hadoop03

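5. Create the user

useradd persagy

passwd persagy (set a password of your choice)

Add the following line to /etc/sudoers: persagy ALL=(ALL) NOPASSWD:ALL

6. Stop the firewall service and disable it at boot

7. Configure passwordless SSH login for both root and persagy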

1. On every node, run: ssh-keygen -t rsa

2. Copy the public key to every machine that should accept passwordless login

ssh-copy-id hadoop01

ssh-copy-id hadoop02

ssh-copy-id hadoop03

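8. Synchronize time across the cluster

9. Create directories under /opt (*these paths can be customized*)

1. mkdir /opt/software /opt/module

2. chown persagy:persagy /opt/software /opt/module

10. Install the JDK (*this document uses jdk1.8.0_121; any JDK version compatible with your Hadoop release can be used instead*)

1. tar -zxvf jdk-8u121-linux-x64.tar.gz -C /opt/module

2. Add the environment variables to /etc/profile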

```shell

#JAVA_HOME

export JAVA_HOME=/opt/module/jdk1.8.0_121

export PATH=$PATH:$JAVA_HOME/bin

```
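Steps 3, 6, and 8 above are listed without commands. The following is a minimal sketch for CentOS 7, not a definitive procedure: it assumes firewalld is the active firewall and uses chrony for time synchronization, neither of which is named in this document. `run` and `prepare_node` are hypothetical helpers, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Dry-run wrapper (hypothetical helper): prints each command unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Apply steps 3, 6 and 8 to the local node; intended to be run as root.
prepare_node() {
  local node_name="$1"
  run hostnamectl set-hostname "$node_name"   # step 3: set the hostname
  run systemctl stop firewalld                # step 6: stop the firewall now
  run systemctl disable firewalld             # step 6: keep it off after reboot
  run yum install -y chrony                   # step 8: assumed time-sync tool
  run systemctl start chronyd
  run systemctl enable chronyd
}

prepare_node hadoop01
```

Review the printed commands first, then rerun with `DRY_RUN=0` on each node, passing that node's own hostname.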

# Cluster Deployment Plan and Software Versions

## Cluster Deployment Plan

| | hadoop01 | hadoop02 | hadoop03 |
| --- | --- | --- | --- |
| Hadoop-hdfs | nameNode, dataNode | dataNode | dataNode, secondaryNameNode |
| Hadoop-yarn | nodeManager | nodeManager, resourceManager | nodeManager |
| zookeeper | ✅ | ✅ | ✅ |
| flume | collection | collection | transport |
| kafka | ✅ | ✅ | ✅ |
| hbase | ✅ | ✅ | ✅ |
| spark | ✅ | ✅ | ✅ |
| hive | ✅ | | |
| MySQL (stores Hive metadata) | ✅ | | |

## Software Versions

| Software | Version | Notes |
| --- | --- | --- |
| Java | jdk-8u121-linux-x64.tar.gz | |
| Hadoop | hadoop-2.7.2.tar.gz | |
| Hive | apache-hive-1.2.1-bin.tar.gz | Can be accessed from DataGrip or DBeaver; requires the hiveserver2 service to be running |
| flume | apache-flume-1.7.0-bin.tar.gz | |
| kafka | kafka_2.11-0.11.0.0.tgz | |
| hbase | | |
| zookeeper | 3.5.7 | |
| spark | | |
| mysql | mysql-community-client-5.7.32-1.el7.x86_64.rpm, mysql-community-server-5.7.32-1.el7.x86_64.rpm, mysql-connector-java-5.1.49 (copy into Hive's lib directory) | |

# Zookeeper Installation

#### *Run as the persagy user*

1. Extract the archive

2. Edit the configuration file zoo.cfg in ZooKeeper's conf directory

```shell

cp zoo_sample.cfg zoo.cfg

```

```xml

dataDir=/opt/module/zookeeper-3.4.10/zkData

ZOO_LOG_DIR=/opt/module/zookeeper-3.4.10/logs

## Create a file named myid in the zkData directory containing this node's serverId; the 1, 2 and 3 below are the serverIds

server.1=hadoop01:2888:3888

server.2=hadoop02:2888:3888

server.3=hadoop03:2888:3888

```

3. Create the /opt/module/zookeeper-3.4.10/zkData and /opt/module/zookeeper-3.4.10/logs directories
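The zoo.cfg comment asks for a myid file on each node, but no commands are given. A minimal sketch, assuming the hostname-to-serverId mapping follows the `server.N` lines above; `server_id_for_host` and `write_myid` are hypothetical helper names, not part of ZooKeeper.

```shell
#!/usr/bin/env bash
# Map a hostname to its serverId, following the server.N lines in zoo.cfg.
server_id_for_host() {
  case "$1" in
    hadoop01) echo 1 ;;
    hadoop02) echo 2 ;;
    hadoop03) echo 3 ;;
    *) echo "unknown host: $1" >&2; return 1 ;;
  esac
}

# Create zkData and logs under the given ZooKeeper home and write myid.
write_myid() {
  local zk_home="$1" host="$2" id
  id="$(server_id_for_host "$host")" || return 1
  mkdir -p "$zk_home/zkData" "$zk_home/logs"
  echo "$id" > "$zk_home/zkData/myid"
}

# Usage on each node (path taken from zoo.cfg above):
#   write_myid /opt/module/zookeeper-3.4.10 "$(hostname)"
#   /opt/module/zookeeper-3.4.10/bin/zkServer.sh start
#   /opt/module/zookeeper-3.4.10/bin/zkServer.sh status
```

Once all three nodes are started, `zkServer.sh status` should report one leader and two followers.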

# Hadoop Cluster Installation

#### *Run as the persagy user*

1. Extract the archive

```shell

tar -zxvf hadoop-2.7.2.tar.gz -C /opt/module/

```

2. Add the environment variables to /etc/profile

```shell

#HADOOP_HOME

export HADOOP_HOME=/opt/module/hadoop-2.7.2

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

```

3. Edit the Hadoop configuration files (directory: /opt/module/hadoop-2.7.2/etc/hadoop); the configuration is identical on every node

1. core-site.xml

```xml

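<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop01:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/module/hadoop-2.7.2/data/tmp</value>
  </property>
  <property>
    <name>io.compression.codecs</name>
    <value>org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.BZip2Codec,org.apache.hadoop.io.compress.SnappyCodec,com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec</value>
  </property>
  <property>
    <name>io.compression.codec.lzo.class</name>
    <value>com.hadoop.compression.lzo.LzoCodec</value>
  </property>
</configuration>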

```

2. hdfs-site.xml

```xml

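<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>hadoop03:50090</value>
  </property>
</configuration>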

```

3. yarn-site.xml

```xml

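<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hadoop02</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>604800</value>
  </property>
</configuration>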

```

4. mapred-site.xml (*first run cp mapred-site.xml.template mapred-site.xml*)

```xml

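<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>hadoop03:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>hadoop03:19888</value>
  </property>
</configuration>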

```

5. hadoop-env.sh

```shell

# The java implementation to use.

export JAVA_HOME=/opt/module/jdk1.8.0_121

```

6. yarn-env.sh

```shell

# some Java parameters

export JAVA_HOME=/opt/module/jdk1.8.0_121

```

7. mapred-env.sh

```shell

# limitations under the License.

export JAVA_HOME=/opt/module/jdk1.8.0_121

```

8. slaves

```xml

hadoop01

hadoop02

hadoop03

```

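4. ***(Important) The NameNode runs on hadoop01 and must be formatted before the first start: hdfs namenode -format***

5. Start Hadoop

1. On hadoop01, run /opt/module/hadoop-2.7.2/sbin/start-dfs.sh

2. On hadoop02, run /opt/module/hadoop-2.7.2/sbin/start-yarn.sh

6. Run jps on each of the three nodes to check which daemons have started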
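The jps check can be compared against the deployment plan table rather than read by eye. The sketch below is one way to do that, under the assumption that the daemon names printed by jps are `NameNode`, `DataNode`, `SecondaryNameNode`, `NodeManager`, and `ResourceManager`; `expected_daemons` and `missing_daemons` are hypothetical helpers written for this illustration.

```shell
#!/usr/bin/env bash
# Daemons each node should run, taken from the deployment plan table.
expected_daemons() {
  case "$1" in
    hadoop01) echo "NameNode DataNode NodeManager" ;;
    hadoop02) echo "DataNode NodeManager ResourceManager" ;;
    hadoop03) echo "DataNode SecondaryNameNode NodeManager" ;;
    *) return 1 ;;
  esac
}

# Print every expected daemon that does not appear in the given jps output.
missing_daemons() {
  local host="$1" jps_output="$2" daemon
  for daemon in $(expected_daemons "$host"); do
    # jps prints "<pid> <name>"; match the name as a whole trailing word so
    # that SecondaryNameNode is not mistaken for NameNode.
    echo "$jps_output" | grep -qE "(^| )${daemon}\$" || echo "$daemon"
  done
}

# Usage, relying on the passwordless SSH configured earlier:
#   for h in hadoop01 hadoop02 hadoop03; do
#     echo "$h missing: $(missing_daemons "$h" "$(ssh "$h" 'source /etc/profile; jps')")"
#   done
```

An empty list after `missing:` means the node matches the plan. Sourcing /etc/profile in the remote command is there because a non-login SSH session may not have the JDK on its PATH.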

# Hive Installation

## Installing Hive

1. Extract the archive

```shell

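# Extract into /opt/module, then rename so that the Hive home is /opt/module/hive
tar -zxvf apache-hive-1.2.1-bin.tar.gz -C /opt/module/

mv /opt/module/apache-hive-1.2.1-bin /opt/module/hive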

```

2. Configure the hive-env.sh file

```shell

mv hive-env.sh.template hive-env.sh

```

```shell

export HADOOP_HOME=/opt/module/hadoop-2.7.2

export HIVE_CONF_DIR=/opt/module/hive/conf

```

3. Hadoop cluster configuration

1. *Start HDFS and YARN*

2. Create the /tmp and /user/hive/warehouse directories on HDFS and make them group-writable

```shell

[persagy@$hostname hadoop-2.7.2]$ bin/hadoop fs -mkdir /tmp

[persagy@$hostname hadoop-2.7.2]$ bin/hadoop fs -mkdir -p /user/hive/warehouse

[persagy@$hostname hadoop-2.7.2]$ bin/hadoop fs -chmod g+w /tmp

[persagy@$hostname hadoop-2.7.2]$ bin/hadoop fs -chmod g+w /user/hive/warehouse

```
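The four commands above can be wrapped so that rerunning them is harmless. This is a sketch only: `ensure_hdfs_dir` is a hypothetical helper, and `HADOOP_CMD` is an assumed override variable that lets the function be tried without a running cluster.

```shell
#!/usr/bin/env bash
# HADOOP_CMD is a hypothetical override; it defaults to the hadoop launcher on PATH.
HADOOP_CMD="${HADOOP_CMD:-hadoop}"

# Create an HDFS directory, make it group-writable, and confirm it exists.
ensure_hdfs_dir() {
  local dir="$1"
  # -p makes the call safe to repeat: it does not fail if the directory exists.
  $HADOOP_CMD fs -mkdir -p "$dir" &&
    $HADOOP_CMD fs -chmod g+w "$dir" &&
    $HADOOP_CMD fs -test -d "$dir"   # exits 0 only if the path is a directory
}

# Usage once HDFS is up:
#   ensure_hdfs_dir /tmp
#   ensure_hdfs_dir /user/hive/warehouse
```

Unlike a plain `-mkdir /tmp`, this does not fail when /tmp already exists on HDFS.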

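## Installing MySQL

#### Stores the Hive metadata; *run as the root user*

1. Check whether MySQL is already installed

1. rpm -qa|grep mysql

2. Uninstall any version that is already installed: rpm -e --nodeps followed by each package name reported by the previous command

2. Install the server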

```shell

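# Install the server package
rpm -ivh mysql-community-server-5.7.32-1.el7.x86_64.rpm
# Start the service once so that the temporary root password is generated
systemctl start mysqld
# Look up the initial temporary password
grep 'temporary password' /var/log/mysqld.log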

```

3. Install the client

```shell

rpm -ivh mysql-community-client-5.7.32-1.el7.x86_64.rpm

```

4. In the user table of the mysql database, change the Host column to % so that other hosts can connect

```mysql

use mysql;

update user set host='%' where host='localhost';

flush privileges;

```

5. Store the Hive metadata in MySQL

1. Copy the driver jar mysql-connector-java-5.1.49-bin.jar to /opt/module/hive/lib/

2. Edit hive-site.xml

```xml

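<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://hadoop01:3306/metastore?createDatabaseIfNotExist=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>user</value>
    <description>username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>passwd</value>
    <description>password to use against metastore database</description>
  </property>
</configuration>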

```

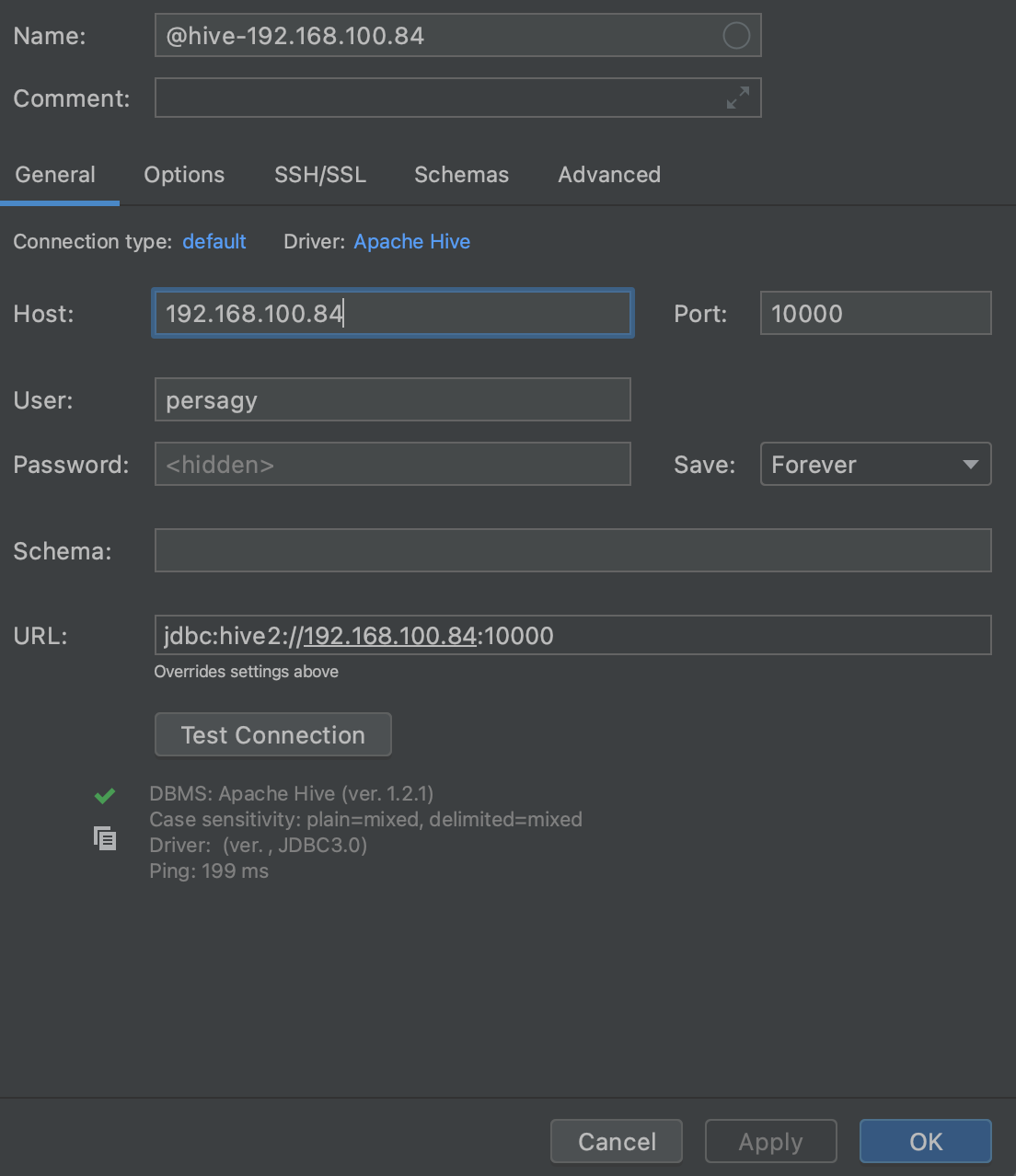

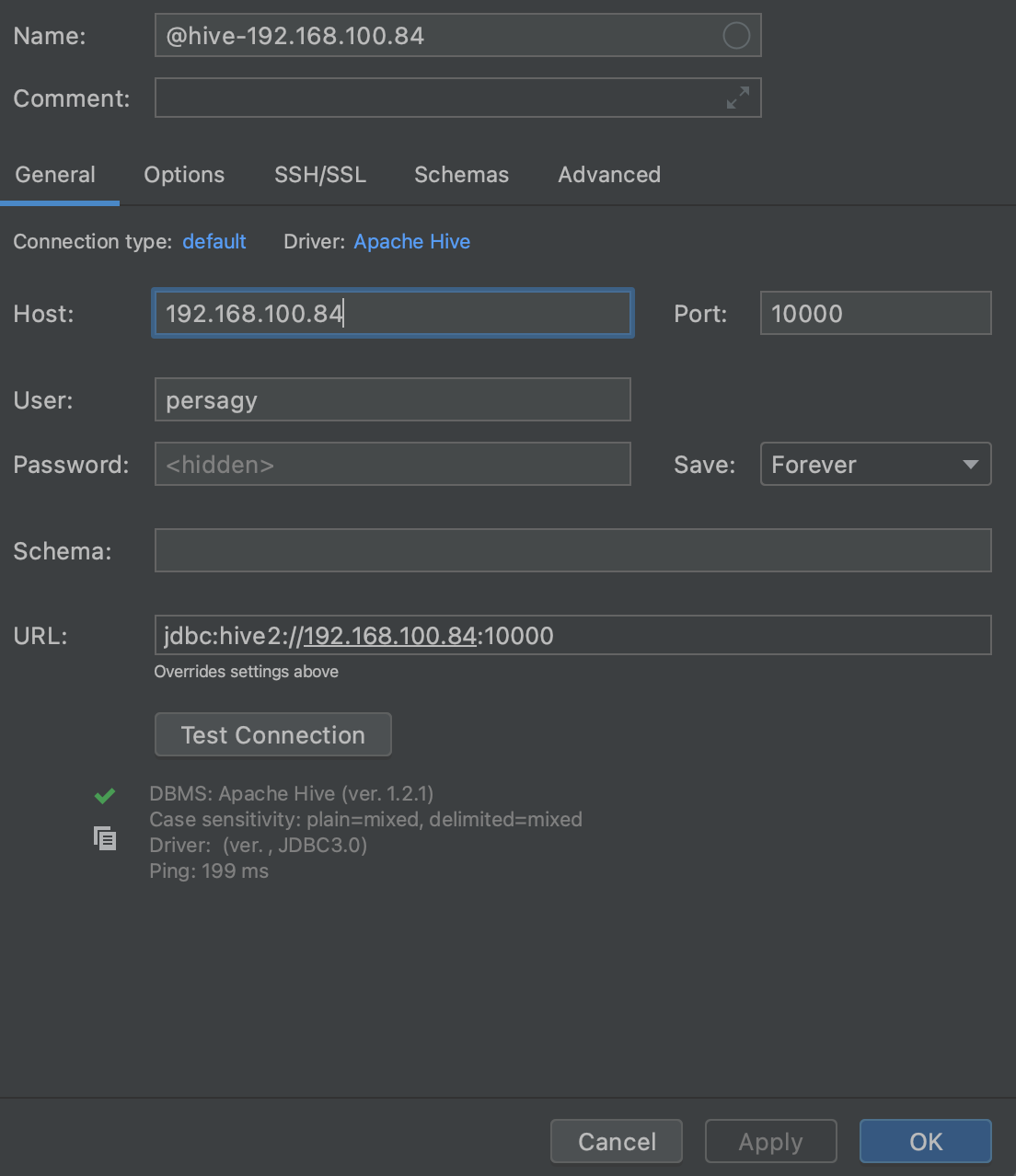

6. Accessing Hive over JDBC

1. Start the hiveserver2 service

```shell

nohup bin/hiveserver2 > hiveserver2.out 2> hiveserver2.err &

```

2. Using DataGrip as an example

1. Add the driver jar hive-jdbc-uber-2.6.5.0-292.jar (choose the jar that matches your version)

2. Configure the connection as shown in the figure:
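Before configuring a GUI client, the same connection can be checked from the command line with beeline, which ships with Hive. The sketch below assumes hiveserver2 listens on its default port 10000 on hadoop01 and that the `default` database is used; `hive_jdbc_url` is a hypothetical helper that builds the URL a JDBC client such as DataGrip would also need.

```shell
#!/usr/bin/env bash
# Build a HiveServer2 JDBC URL; 10000 is HiveServer2's default port.
hive_jdbc_url() {
  local host="$1" port="${2:-10000}" database="${3:-default}"
  echo "jdbc:hive2://${host}:${port}/${database}"
}

# Usage from the Hive home directory (/opt/module/hive) on hadoop01:
#   bin/beeline -u "$(hive_jdbc_url hadoop01)" -n persagy
```

If beeline connects and `show databases;` returns a result, the host, port, and user can be entered in the client with reasonable confidence.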